Kubernetes Best Practices - Part 2 - Building patterns

Continuing our best practices series, let's explore other important practices based on industry standards.

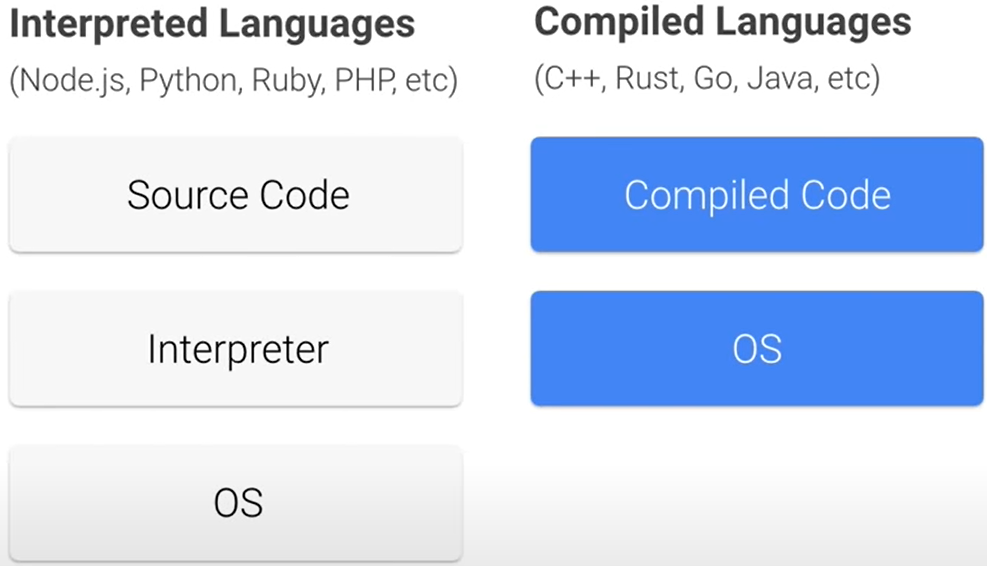

With interpreted languages, the source code is sent to an interpreter and executed directly. With a compiled language, the source code is turned into compiled code beforehand.

With compiled languages, the compilation step often requires tools that are not needed to actually run the code. This means you can remove these tools from the final container completely.

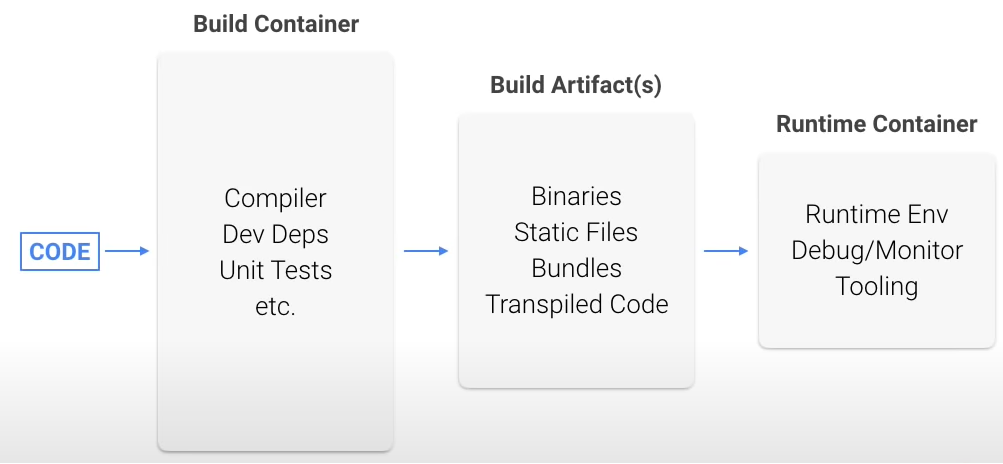

To do this, you can use the builder pattern. The code is built in the first container, and then the compiled code is packaged in the final container without all the compilers and tools required to make the compiled code.

So let's take a Go application through this process. First, let's move from the onbuild image to Alpine Linux.

In the new Dockerfile:

* The container starts with a golang:alpine image.

* Creates a directory for the code

* Copies the source code

* Builds the source

* Finally, starts the app.
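The steps above could be sketched as the following single-stage Dockerfile. This is a minimal sketch, not the exact file from Part 1: it reuses the build lines, the binary name `goapp`, and port 8080 from the multi-stage file shown later in this article, and it assumes the Go source (including a `go.mod`, which recent Go versions expect) sits at the root of the build context.

```dockerfile
# Single-stage build: the compiler and Go tooling remain in the final image.
FROM golang:alpine

# Create a directory for the code and make it the working directory.
WORKDIR /app

# Copy the source code from the build context into the image.
ADD . /app

# Build the source into a binary named goapp (name assumed for illustration).
RUN cd /app && go build -o goapp

# Port assumed to match the app's listener.
EXPOSE 8080

# Start the app.
ENTRYPOINT ./goapp
```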

This container is much smaller than the onbuild container, but it still contains the compiler and other Go tools that we don't need at runtime. Let's extract just the compiled program and put it into its own container.

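```dockerfile
# Stage 1: build the binary with the full Go toolchain
FROM golang:alpine AS build-env
WORKDIR /app
ADD . /app
RUN cd /app && go build -o goapp

# Stage 2: start again from a fresh, minimal base image
FROM alpine
# Install root CA certificates so HTTPS calls work
RUN apk update && apk add ca-certificates && rm -rf /var/cache/apk/*
WORKDIR /app
# Copy only the compiled binary from the build stage
COPY --from=build-env /app/goapp /app
EXPOSE 8080
ENTRYPOINT ./goapp
```

You might notice something strange about this Dockerfile: it has two FROM lines.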

The first section looks exactly the same as the previous Dockerfile in Part 1, except that it uses the AS keyword to give this step a name. In the next section, there is a new FROM line. This will start a fresh image, and instead of using golang:alpine, we will use raw alpine as the base image.

Raw Alpine Linux doesn't have any SSL certificates installed, which will make most API calls over HTTPS fail. So let us install some root CA certificates.
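To show that step in isolation, here is a small sketch of the certificate install on a plain alpine base. It uses apk's `--no-cache` option, a variation on the RUN line in the Dockerfile above rather than what the original file does. With it, apk leaves no package index cache behind, so there is nothing to delete afterwards.

```dockerfile
# Minimal base image without any root CA certificates.
FROM alpine

# Install the CA bundle so TLS connections to HTTPS endpoints can be verified.
# --no-cache fetches the package index on the fly and does not store it,
# which replaces the "apk update ... rm -rf /var/cache/apk/*" sequence.
RUN apk add --no-cache ca-certificates
```

Either form should work for this purpose. The choice is mostly a matter of taste.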

And now comes the interesting part. You can use the COPY command with the --from option to copy the compiled code from the first container into the second. This line copies just that one file and none of the Go tooling. The new multistage Dockerfile produces a container image that's just 12 megabytes. The original container image was 700 megabytes. That is quite a difference.

Using small base images and the builder pattern are great ways to create much smaller containers without a lot of work. Now, depending on your application stack, there may be additional ways to reduce your container image size as well.

But do small containers actually have a measurable advantage?

Let's look at two areas where small containers shine:

- Performance

- Security

Performance

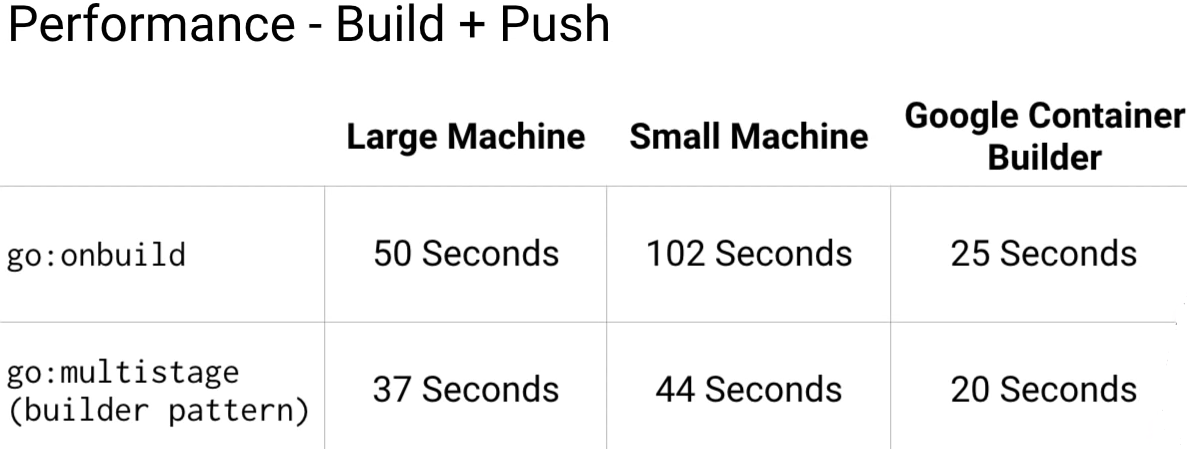

For performance, let's look at how long it takes to

* Build a container

* Push it to a registry

* and then pull it down from the registry

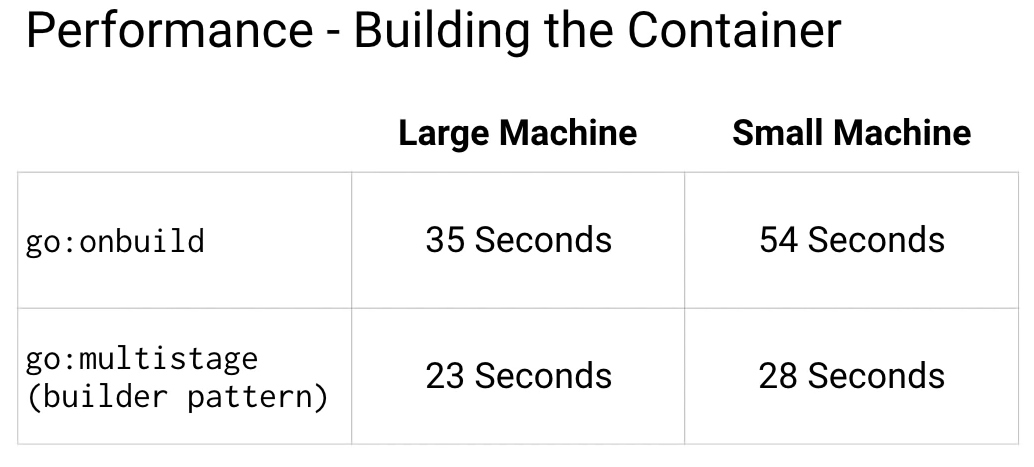

Building a container

For the initial build, you can see that the smaller container has a huge advantage over larger containers. Docker will cache layers so subsequent builds will take very little time for either.

But many CI systems that folks use to build and test containers don't cache layers, so every build is effectively an initial build and the time saving is significant. Just think about how many times you build and test your code.

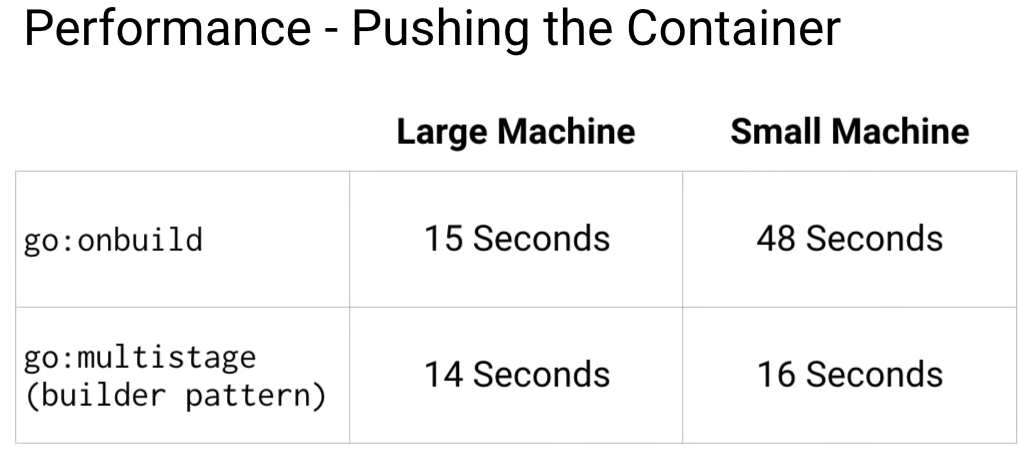

Pushing container to a registry

Now that the container is built, you need to push it to a container registry so you can use it in your Kubernetes cluster.

Google Container Registry

I recommend using the Google Container Registry because:

- You only pay for the raw storage and network.

- There is no additional fee to manage containers.

- It's private and secure, and it's lightning fast.

In fact, GCR uses many tricks to speed up pushing. You can see that the time to push both the containers for the large machine is almost the same. This is because GCR uses a global cache for common base images, meaning you don't need to upload them at all.

With the small machine, the CPU becomes the bottleneck. As you can see, there is still a significant advantage to using small containers. If you're using Google Container Registry, I highly recommend using Google Container Builder as part of your build system.

As you can see, it's much faster to build and push than even the large machine. And you get 120 build minutes free per day, which should be enough to cover most people's container-building needs.

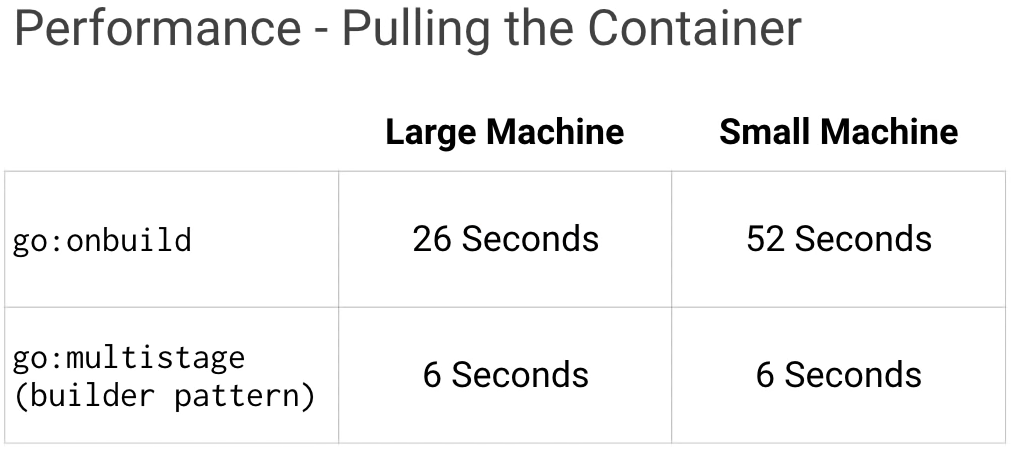

Pulling the container

While you might not care about the time it takes to build and push a container, you should really care about the time it takes to pull the container.

For example, let's say you have a three node cluster and one of the nodes crashes. If you're using a managed system like Google Kubernetes Engine, the system will automatically spin up a new node to take its place.

However, this new node will be completely fresh and will have to pull all your containers before it can start working. If it takes too long to pull the containers, this is just time where your cluster isn't performing as well as it should.

Now, there are many cases where this may occur, such as adding a new node to your cluster, upgrading your nodes, or even switching to a new container for your deployments. So minimizing pull times becomes key. You can easily tell the smaller container is much faster than the large container. And you're probably running multiple containers on your Kubernetes cluster, so these times can add up quickly.

Using small, common base images for your containers significantly speeds up deployments and shortens the time it takes for new Kubernetes nodes to come online.